A beginner’s guide to KPIs…

Background, Context and all that…

Some of you luckier readers will be wondering what on earth a KPI is. Alas, many of my readers will know, and rarely will they have a happy tale to tell about them. Let me tell you mine.

You see, to me the story of the KPI is none other than the story of the modern trend to remove the human element (that most fallible of elements) from big business. I propose that there has crept up upon us, starting when we came down from the trees and now coming to its final fruition with the industrial revolution, a situation in which the workings of our society, our organisations, governments, armies or companies, are simply too complicated to be designed or managed by any one person, whatever force his or her personality might possess.

No, the time of the one big man, the head honcho, the brain, scheming away in his tower, is over – and we enter the era, nay the epoch, of the human hive.

For now we see that in order to achieve great things, it is the ability to sort and organise mankind, rather than the ability of each man on his own, that matters most.

I could wander from my thesis awhile to describe a few side effects, for example, to point out the age of ‘middle management’ is here to stay, or to philosophize about the world where no one is actually ‘driving’ and the species is wandering like a planchette on a Ouija board and how this explains why no one seems to be able to steer us away from the global warming cliff just ahead…

But no, I will not be pulled off course, I will return to our good friend, the KPI.

So it seems we have these complex organisms, such as the venerable institution that is the company, that have evolved to survive in the ecosystem that is our global economy. Money is the blood, and people, I fancy, are the cells. And just as no particular brain cell commands us, no particular person commands a company. Just as the body divides labour among cells, so does the company among its staff. We train young stem cells into muscle, tendon and nerve. We set great troops of workers to construct fabulous machines to carry our loads just as our own cells crystallize calcium to make our bones.

Having set the scene thus I must move on, for where are the KPIs in all this?

As clever as the cells of our bodies may be, to choose depending on the whims of circumstance to turn to skin or liver or fat, that is but nothing compared with the cleverness with which the cells are orchestrated to make a cohesive body, with purpose and aim, with hopes and dreams, and of course sometimes even the means to achieve them. And the question is: how is that orchestration achieved?

As a devout evolutionist, I find it clear that it was by no design, but rather through the constant failure of all other permutations, that this fabulously clever arrangement came about; and so it is with the organism that is the company.

It is this conviction that leads me to claim, contrary to the preaching of many business schools, that good companies are not designed, but evolve, and by a process of largely unconscious selection. Like bacteria on a petri dish, companies live or die on their choices, and by every succeeding generation, the intelligence of these choices is embroidered into the DNA of the company.

Yes, I am saying that the CEO of Microsoft, or Rio Tinto or Pfizer, is no more sure of his company’s recipe for success than any one cell in your brain is of how it came to be that you can read these words. Ok, maybe that is unfair. They probably have a good shot at reproducing success in other companies, but they only understand how the company works, not why.

It is well to remember that very few of the innovations present in a modern successful company were developed within that company – the system of raising money from banks or through a system of stocks and shares, the idea of limiting liability to make these investments more palatable, the development of the modern contract of employment to furnish staff; nay, the very idea that a group of people can actually get together and create the legal entity that is the ‘company’ has been developed over centuries.

Even within the typical office we see many innovations essential to run a business that could never have been ‘designed’ better by a single mind – the systems to divide labour into departments – finance, marketing, sales, R&D, logistics, customer service; the reporting hierarchies and methods for making decisions; the new employee checklists, the succession plans, the new product stage-gate system, the call-report database, the annual budget, the balance sheet, the P&L – these are all evolved and refined tools that incorporate generations of brain power.

Whether it is the idea of share options or the idea of carbon copies, the list of machinery is endless and forms the unwritten DNA of the modern company. The company of today has little in common with the farmstead of 300 years ago, and indeed, just like the bullet train, it would not work too well if taken back in time 300 years. It only works in its present setting. It is part of a system – an ecosystem.

Now a business tool that has been evolving for some time, slowly morphing to its full and terrible perfection is the Key Performance Indicator or KPI.

The Key Performance Indicator

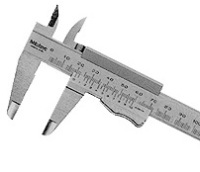

There is a saying in engineering circles: you cannot improve what you do not measure.

This philosophy accidentally leaked to the business community, probably at Harvard, which seems a great place to monetize wisdom, and so there is presently a fever of ‘measurement’ keeping middle managers in their jobs, and consultants on their yachts.

This sounds reasonable – but let’s pick at it a little.

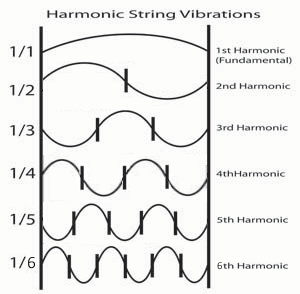

When the engineer installs a sensor in a reactor to measure its pressure, it is usually just one element in a holistic system of feedback loops that use the measurement in real-time to control inputs to that reactor. Thus we can see that measurements in themselves are not enough, there needs to be an action that is taken that affects the measured property – a feedback loop.

Likewise any action taken in a complex system will tend to have multiple effects and while you may lower the pressure in a reactor, you may raise it somewhere else, thus the consequences of the action need to be understood.

And lastly, if the pressure in the reactor is now right, the value of the knowledge has diminished, and further action will be of no further benefit.
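For the technically minded, the loop just described can be sketched in a few lines of Python. This is only a toy: the reactor model, the `gain` and the `tolerance` are invented for illustration and are not taken from any real plant.

```python
def control_pressure(pressure, target, gain=0.5, tolerance=0.01, max_steps=100):
    """Nudge the pressure toward the target, then stop acting.

    Hypothetical toy model: each step the controller removes a fraction
    (gain) of the gap between the measured pressure and the target.
    """
    steps = 0
    while abs(pressure - target) > tolerance and steps < max_steps:
        error = pressure - target   # the measurement
        pressure -= gain * error    # the action that affects what is measured
        steps += 1
    return pressure, steps


final, steps = control_pressure(pressure=12.0, target=10.0)
print(f"settled at {final:.4f} after {steps} corrections")
```

Once the gap is inside the tolerance the loop exits: the measurement has done its job and further action would bring no further benefit.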

So just like a body, or a machine, a company needs to be measured in order to be controlled and improved, and it occurs to me that these key measurements, the KPIs, need to fulfil a similar set of requirements if they are to be of value.

A few years ago I decided to write a list of KPI must-haves, which I present here:

- the property measured must be (or correlate with) a company aim (eg profitability)

- measurements need to be taken to where they can be interpreted and acted upon, using actions with predictable effects, creating a closed loop

- the secondary effects of that action need to be considered

- repeat only if the benefits repeat too

Now let’s take a look at some popular KPIs and see if they conform to these requirements.

Production KPIs

There are a multitude of KPIs used on the ‘shop floor’ of any enterprise, be it a toothpick factory, a bus company, a florist or a newspaper press. Some will relate to machines – up-time metrics, % on time, energy usage, product yield, shelf life, stock turns – indeed far too many to cover here, so I will pick one of my favourites.

“Availability” is a percentage measure of the fraction of time your machine (or factory or employee) is able to ‘produce’ or function. If you have a goose that lays golden eggs, then it is clear that ‘egg laying activity’ will correlate with profits, so point #1 above is satisfied, and measuring the egg laying frequency is potentially worth doing. If you then discover that your goose does not lay eggs during football matches you may consider methods to treat this, such as cancelling the cable subscription. A few trials can be performed to determine if the interference is effective, proving #2. Now all you need to do is worry about point #3; will the goose fly away in disgust at the new policy?

What about #4? Indeed you may get no further benefit if you continue to painstakingly record every laying event ad infinitum. KPIs cost time and money – they need to keep paying their way and may often be best as one-off measures; however, what if your goose starts to use the x-box?

So that is an example of when the KPI ‘availability’ may be worth monitoring, as observing trends may highlight causes for problems and allow future intervention. So surely availability of equipment is a must-have for every business!?

No. There are many times when plant availability is a pointless measure. Consider for example an oversized machine that can produce a year’s supply in 8 minutes flat. So long as it is available for 8 minutes each year, it does not matter much if it is available 360 days or 365 days. Likewise, if you cannot supply the machine with raw materials, or cannot sell all the product it makes, it will have forced idle time and availability is suddenly unimportant.

The simplest way to narrow down which machines will benefit from an availability or capacity KPI is to ask: is the machine a bottleneck?
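To put rough numbers on the bottleneck question, here is a minimal Python sketch. The oversized machine is the one from the example above; the "busy press", its hours and the 0.8 threshold are assumptions made up purely for illustration.

```python
def availability(uptime_hours, scheduled_hours):
    """Fraction of scheduled time the machine was able to run."""
    return uptime_hours / scheduled_hours


def is_bottleneck(hours_needed_for_demand, uptime_hours, threshold=0.8):
    """Call a machine a bottleneck if demand uses most of its uptime.

    The 0.8 threshold is an arbitrary illustrative choice.
    """
    return hours_needed_for_demand / uptime_hours >= threshold


HOURS_PER_YEAR = 365 * 24

# The oversized machine: a year's supply in 8 minutes, down 5 days a year.
oversized_uptime = 360 * 24
oversized = is_bottleneck(8 / 60, oversized_uptime)

# A hypothetical busy press that needs 8,000 hours a year to meet demand.
press_uptime = 8_200
press = is_bottleneck(8_000, press_uptime)

print(f"oversized machine availability: {availability(oversized_uptime, HOURS_PER_YEAR):.1%}")
print(f"oversized machine is a bottleneck: {oversized}")
print(f"busy press is a bottleneck: {press}")
```

Only for the press would a point or two of extra availability translate into extra product, so only there does the KPI earn its keep.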

For other types of issues, let’s look at some other KPIs.

Quality KPIs

Quality KPIs are interesting. Clearly, it is preferable to ship good products and have happy customers. Or is it?

In any production process, or indeed in any service industry, mistakes will be made. Food will spoil, packaging will tear, bits will be left out. It is now fashionable to practice a slew of systems designed to minimise these effects: to detect errors when they are made, to re-check products before they are shipped, to collect and collate customer complaints and to feed all this info back into an ever tightening feedback loop called ‘continuous improvement’.

KPIs are core to this process and indeed KPIs were being used in these systems long before the acronym KPI became de rigueur. To the quality community, a KPI is simply a statistic which requires optimization. The word ‘Key’ in KPI not only suggests it represents a ‘distillation’ of other numerous and complex statistics, but implies that the optimising of this particular number would ‘unlock’ the door to a complex improvement.

Thus, a complex system is reduced to a few numbers, and if we can improve those numbers, then all will be well. This allows one to sleep at night without suffering nightmares inspired by the complexity of one’s job.

The reject rate is a common quality KPI – it may encompass many reasons for rejection, but is a simple number or percentage. It is clearly good to minimize rejects (requirement #1), and observing when reject rates rise may help direct investigations into the cause thereof, satisfying requirement #2.

However, from rule #2 we see that this KPI is only worth measuring if there will be follow-up: analysis and corrective action. This must not be taken for granted. I have visited many plants that monitor rejects and, when asked why, they report that head office wants to know. What a shame. Perhaps head office will react by closing that factory some time soon.

The failure to use KPIs for what they are intended is perhaps their most common failure.

Another quality KPI is the complaint rate. Again, we make the assumption that complaints are bad, and so if we wish to reduce these we should monitor them.

Hold the boat. How does the complaint rate fit in with company aims? We already know that mistakes happen, but eliminating quality issues is a game of diminishing returns, so rather than doggedly aiming for ‘zero defect’ we need to determine what complaint rate really is acceptable.

So here is another common KPI trap. Some KPIs are impossible to perfect, and it is a mistake to set the target at perfection. Think of your local train service. Is it really possible for a train system to run on time, all the time? The answer is an emphatic no!

The many uncontrolled inputs into a public transit system – the weather, the passengers, strike actions, power outages and the like – will all cause delays, and while train systems can allow more buffer time between scheduled stops to cater to such issues, this type of action actually dilutes other aspects of service quality (journey frequency and duration). Add to that, finally, the fact that a train cannot run early, so losses cannot be recovered.

The transport company will of course work to prevent delays before they occur, and lay on contingency plans (spare trains) to reduce impacts, but the costs and practicality mean that any real and meaningful approach needs to accept that a certain amount of delay will be inevitable. A train company could pour their entire annual profit into punctuality and they would still fall short of perfection.

So it is with most quality issues, the law of diminishing returns is the law of the land. Thus the real challenge is to determine at what point quality and service issues actually start to have an impact on sales and cashflow. This is another common pitfall of the KPI…

The correlation between the KPI and profitability is rarely a simple positive one, especially at the limits.

Some companies get no complaints. Is this good? No, often it is not! This company may be spending too much money on QC. The solution here is to work with and understand the customer – what issues would they tolerate and how often? If you did lose some customers by cutting quality, would the financial impact be greater than the savings?

On the other hand, don’t make the opposite mistake: once a reputation for poor quality is earned, it is nearly impossible to shake.
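The diminishing-returns argument above can be made concrete with a small Python sketch. Everything in it is hypothetical: the shape of the complaint curve, the unit volume and the cost per complaint are invented to show the idea, not measured from any real business.

```python
def complaint_rate(qc_spend, base_rate=0.05, scale=10_000):
    """Hypothetical diminishing-returns curve: each extra dollar helps less."""
    return base_rate / (1 + qc_spend / scale)


def total_cost(qc_spend, units=100_000, cost_per_complaint=50):
    """QC spend plus the assumed cost of the complaints that remain."""
    return qc_spend + complaint_rate(qc_spend) * units * cost_per_complaint


# Try QC budgets from $0 to $200,000 in $5,000 steps and keep the cheapest.
candidates = range(0, 200_001, 5_000)
best_spend = min(candidates, key=total_cost)

print(f"cheapest QC budget: ${best_spend:,}")
print(f"complaint rate at that budget: {complaint_rate(best_spend):.2%}")
print(f"complaint rate at $200,000: {complaint_rate(200_000):.2%}")
```

Under these made-up numbers the cheapest point still leaves a non-zero complaint rate, and spending five times as much never reaches zero either. Note that the sketch ignores the reputational damage just mentioned, which would argue for erring on the side of quality.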

Financial KPIs

As companies become bigger, there is a tendency to divide tasks according to specialized skill and training. Thus it can happen that the management of a big mining company may never set foot in a mine, may not know what their minerals look like, nor may they know how to actually dig them up or how to make them into anything useful. In other words, they would be useless team members after a nuclear apocalypse.

However, this is no different from our brain which is little use at growing hair, digesting fat or kicking footballs.

It is thus necessary that the organism (the company) develops a system to map in a flow of information from its body to its mind and then another mapping to take the decisions made in that mind and distribute them to the required points of action.

To blast in a quarry or to kick the football is indeed best done by organs trained and capable, and these are no less important than the remote commanders in the sequence of events.

And just as our bodies have nerves to transmit information about the position of our lips and the temperature of the tea to our mind, so the company has memos, telephones and meetings. And KPIs are the nouns and subjects in the language used.

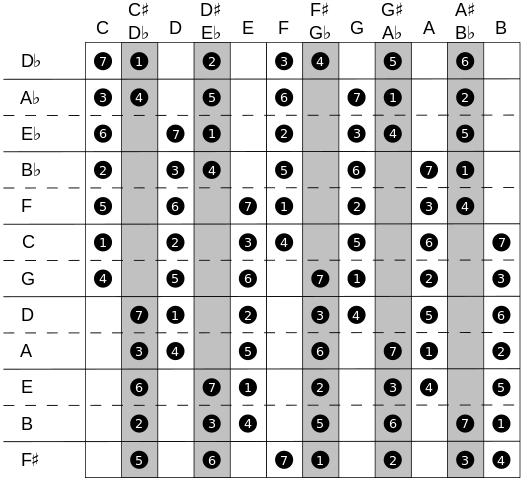

Furthermore, some KPIs need to be further distilled and translated from the language of the engineer (Cpk), the quality manager (reject rate) or the plant manager (units shipped) to that of the accountant (revenue), the controller (gross margin) and eventually that of the general manager and the shareholder (ROI). This is perhaps the main duty of middle management, bless their cotton socks.

Unfortunately, the mapping of everyday activities to financial KPIs is fraught with danger. The biggest concern comes from the multiple translation issue. That is to say, KPIs can suffer from a case of Chinese whispers, losing their true meaning along the way, resulting in the worst result: a perverse incentive.

Yes, ladies and gentlemen, this does happen.

Let’s say you want to improve your cash situation. You may choose to change the terms in your sales contracts for faster payment, in essence reducing the credit you allow your customers. This may have the desired effect, lowering the KPI called “receivables” and this looks good on the balance sheet – but let’s look at requirement #3 in the KPI “must have” list. What are the ripple effects of this move? It is clear this will not suit some of your customers, who, considering recent economic trends, probably also want to improve their cash situation; thus you may lose customers to a competitor willing to offer better terms.

And so we see the clear reason for perverse incentives is the consideration of KPIs individually instead of collectively. There has to be a hierarchy upon which to play KPIs against one another. Is revenue more important than margin? Is on-time shipping a part-load preferable to shipping “in full” a little late?

So we see again that the systems used to distill company indicators, and the choice of which decisions are centralized and which are localized, need to be developed and constantly refined using an iterative process. The art of translating the will of shareholders into a charter or mission statement and then translating that into targets for sales, service and sustainability is a task far too complex to perfect at first attempt.

KPI Epic Fails

While on the subject of KPIs I cannot resist the opportunity to bring to mind a few fun examples of KPIs gone badly wrong.

The Great Hanoi Rat Massacre

The French administration in Hanoi (Vietnam) were very troubled by the rat population in Hanoi around the start of the last century, and knowing as they did about rats’ implication in the transmission of the plague, set about to control the population. A simple KPI was set – “number killed” – and payments were made to the killers on this basis. There was immediate success, with rats being brought in by the thousand and then the tens of thousands per day. The administration was pleased though somewhat surprised by the sheer number. Their surprise gradually transformed into disbelief as time wore on and the numbers failed to recede.

You guessed it. The innovative residents of Hanoi had started to breed rats.

The Magic Disappearing Waiting List

Here’s a more recent example from the National Health Service in the UK (the NHS).

A health service is not there to make a profit, it is there to help the population, to repair limbs, to ease suffering, to improve the length and quality of life – and to do this as best it can on a finite budget. So the decisions on where to invest are made with painstaking care – and needless to say, KPIs are involved. Not only big picture KPIs like life-expectancy, or cancer 5-year survival rates, but also on service aspects, such as operation or consultation waiting times.

It will therefore not be surprising to you to learn that the NHS middle management started to measure waiting times and develop incentives to bring these down, or even eliminate them. This sounds very reasonable, does it not?

Now ask yourself, how do you measure a waiting time? Say a surgery offers 30 minute slots – you may drop in and wait for a vacant slot, but as the wait may exceed a few hours it is just as well to book a slot some time in the future and come back then. So one way to measure waiting times is to measure the mean time between the call and the appointment. This of course neglects to capture the fact that some patients do not actually mind the wait and indeed may choose an appointment in two weeks’ time for their own convenience rather than due to a lack of available slots. Let’s put that fatal weakness aside for the minute as I have not yet got to the amusing part.

After measurements had been made for some time, and much media attention had been paid to waiting times, the thumb-screws were turned and surgeries were being incentivized to cut down the times, with the assumption they would work longer hours, or perhaps create clever ‘drop-in’ hours each morning or similar.

Pretty soon however, the results started coming in: the waiting times at some surgeries were plummeting! Terrific news! How were they doing it?

Simple: they simply refused to take future appointments. They had told their patients: call each morning, and the first callers will get the slots for that day. This new system meant nobody officially waited more than a day. Brilliant! Of course it is doubtful the patients all felt that way.

How The Crime Went Up When It Went Down

If you work for the police, you will be painfully aware that measuring crime is difficult. And so it is with the measurement of many ‘bad’ things – for example medical misdiagnoses or industrial safety incidents.

Let’s look at workplace safety; while it may be fairly easy to count how many of your staff have been seriously injured at work, it is much harder to record faithfully the less serious safety incidents – or more specifically, the ones that might have been serious, but for reasons of sheer luck, were not. The so-called ‘near-misses’.

Now to the problem. Let us say you are a fork-lift driver in a warehouse and one day, in a moment of inattention, you knock over a tower of heavy crates. Luckily, no-one was around and more luckily, no damage was done. So what do you do? Do you immediately go to the bad-tempered foreman with whom you do not get along and tell him you nearly killed someone and worse, nearly caused him a lot of extra work? Or do you carefully stack the crates again and go home for dinner?

The police suffer a similar predicament. The reporting of a crime is often the last thing someone wants to do, especially if they are the criminal. Now let’s say you are an enterprising young administrator just starting out in the honourable role as a crime analyst at the Met. You want to tackle crime statistics in order to ensure the most efficient allocation of funds to the challenges most deserving thereof. Do bobbies on the beat pay for themselves with proportionately reduced crime? Does the ‘no broken windows’ policy really work? Does the fear of capital punishment really burn hot in the mind of someone bent on murderous revenge? Such are the important questions you would wish to answer and you have a budget to tackle it. What do you do?

You set out to gather statistics of course, and then to develop those tricky little things, the KPIs.

Now let’s say a few years pass, and after some success, you are promoted a few times and your budget is increased. Yay! You have always wished for more money to get more accurate data! Another few months later and the news editors are aghast with the force. Crime is up! Blame the police – no, blame the left – no, blame the right! Blame the media! It’s video games – no, it’s the school system!

No, actually it’s a change in the baseline. The number of crimes recorded most likely went up because the effort in recording them went up. The crime rate itself may well have gone down.

The opposite can also happen. Say you run a coal mine and you will be given a huge bonus if you can get through the year with fewer than a certain number of near misses. Will you really pressure your team to report every little thing? I think not.

So the lesson here is: watch out for a KPI where you want the number to go one way to achieve your longer term aims, but where the number will also depend on measurement effort.
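The baseline effect described above comes down to one multiplication, shown here as a tiny Python sketch. The crime counts and recording fractions are invented purely for illustration.

```python
def recorded_crimes(actual_crimes, recording_fraction):
    """Crimes that reach the statistics, given the fraction that get recorded."""
    return round(actual_crimes * recording_fraction)


# Hypothetical figures: real crime falls 10% while recording effort improves.
before = recorded_crimes(actual_crimes=10_000, recording_fraction=0.40)
after = recorded_crimes(actual_crimes=9_000, recording_fraction=0.60)

print(f"recorded before: {before}, recorded after: {after}")
```

With these assumed numbers the recorded figure rises from 4,000 to 5,400 even though the true number of crimes fell. The coal mine bonus works the same way in reverse: lower the recording fraction and the KPI improves without anyone being any safer.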

The Profit Myth

Most KPIs are dead dull. The very mention of KPIs will elicit groans and be followed swiftly by a short nap. The ‘volume of sales’ KPI is no different. The issue with the volume KPI is probably made worse by the clear fact that actually thinking about KPIs is a strong sedative. Surely selling more is good? Well, if you can fight through the fog of apathy, and actually think about this for a second, it is easy to see this is often not so.

To see why, it is important to understand price elasticity. It is often true that lowering price will increase sales, so an easy way to achieve a target ‘volume’ (number) of sales is to drop price. That way you can make your volume KPI look good, as well as your revenue KPI, and so long as there is still a positive margin on all the units sold your earnings KPI (aka profit!) will see upside. There can’t possibly be any downside, can there?

Of course, astute financial types can find this fault easily, and perhaps the question of ‘how’ would make a good one for a job interview. It turns out more profit is not always good (seriously!). Whether more profit is really worth taking depends on the ratio of the increased earnings to the increased investment that is needed to make them.

There is another KPI I mentioned earlier: the “ROI” or return on investment. When I discussed it I implied that it was only of interest at the GM level, but really the reason only the GM tends to see this KPI is because it is difficult to calculate, and often only the GM has the clout to get it – but it should be considered by all. To me, it is the king of KPIs for a publicly listed company. And it turns out the ROI may actually go down with increasing profit.

If making more widgets requires no further investment, then the maths is easy, but that is rarely true.

The question is this: is it better to have a large ‘average’ business or a smaller one with higher profit margins? It turns out, from an investor’s perspective, that the latter is fundamentally preferable.

The ROI treats a business a bit like a bank account, asking: what interest rate does it offer? The business should be run to give the highest interest rate (%), not the highest interest ($). It is always possible to get more interest from a bank account – just put more money in the bank.
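A quick worked example in Python shows how profit can rise while ROI falls. The two sets of figures are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def roi(profit, invested_capital):
    """Return on investment: profit as a fraction of the capital tied up."""
    return profit / invested_capital


# Hypothetical business before expansion.
small = {"profit": 2_000_000, "capital": 10_000_000}
# Hypothetical expansion: $10M more capital earns only $1M more profit.
large = {"profit": 3_000_000, "capital": 20_000_000}

roi_small = roi(small["profit"], small["capital"])
roi_large = roi(large["profit"], large["capital"])

print(f"before: profit ${small['profit']:,}, ROI {roi_small:.0%}")
print(f"after:  profit ${large['profit']:,}, ROI {roi_large:.0%}")
```

In this made-up case profit grows by half while the 'interest rate' drops from 20% to 15%, which is exactly the trap of chasing the interest rather than the rate.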

Translating a Mission Statement into company KPIs

I mentioned above that the ROI is a pretty darn good KPI – so can we use it alone? Of course not. Recall that the KPIs are mere numbers we measure that try to tell us how we are doing against the company mission statement, and while the company mission statement may unashamedly describe vast profits as a goal, this is almost universally not the whole story.

The organism that is the modern company has one particular need besides profit today, and that is profit tomorrow. The ROI does not capture this need, so more KPIs are required, and trickier ones – ones that capture sustainability, morale, innovation and reputation (brand value). This is the turf of the mission statement.

Even though the more cynical of my readers will know the mission is usually ‘make lots of dosh’, and anything beyond that is window dressing, I would venture that the mission statement is the first step to figuring out which KPIs to put first.

Let’s dissect a few examples. In my own 2-minute analysis I decided they fall into four types:

- To appeal to employees:

McGraw Hill:

We are dedicated to creating a workplace that respects and values people from diverse backgrounds and enables all employees to do their best work. It is an inclusive environment where the unique combination of talents, experiences, and perspectives of each employee makes our business success possible. Respecting the individual means ensuring that the workplace is free of discrimination and harassment. Our commitment to equal employment and diversity is a global one as we serve customers and employ people around the world. We see it as a business imperative that is essential to thriving in a competitive global marketplace.

- To appeal to customers (aka the unabashed PR stunt)

A recent one from BP:

In all our activities we seek to display some unchanging, fundamental qualities – integrity, honest dealing, treating everyone with respect and dignity, striving for mutual advantage and contributing to human progress.

I couldn’t leave this one out from Mattel:

Mattel makes a difference in the global community by effectively serving children in need. Partnering with charitable organizations dedicated to directly serving children, Mattel creates joy through the Mattel Children’s Foundation, product donations, grant making and the work of employee volunteers. We also enrich the lives of Mattel employees by identifying diverse volunteer opportunities and supporting their personal contributions through the matching gifts program.

- To appeal to investors. This is usually a description of how they are different or what they will do differently in order to achieve big dosh.

CVS:

We will be the easiest pharmacy retailer for customers to use.

Walt Disney:

The mission of The Walt Disney Company is to be one of the world’s leading producers and providers of entertainment and information. Using our portfolio of brands to differentiate our content, services and consumer products, we seek to develop the most creative, innovative and profitable entertainment experiences and related products in the world.

- For the sake of it – some companies clearly just made one up because they thought they had to, and obviously bought a book on writing mission statements:

American Standard’s mission is to “Be the best in the eyes of our customers, employees and shareholders.”

Now a great trick when analysing the statement of any politician, and thus any mission statement, is to see if a statement of the opposite is absurd. In other words, if a politician says “I want better schools”, the opposite would be that he or she wants worse schools, which is clearly absurd. Thus the original statement has no real content, it is merely a statement of what everyone would want, including the politician’s competitors. Thus to judge a politician, or a mission statement, it is important to look not at what they say, but at what they say differently from the rest.

Mission statements seem rather prone to falling into the trap of stating the blindingly obvious, and as a result become trivial, defeating the point. Such is the case with American Standard. Of course you want to be the best. And of course it is your customers, employees and shareholders who you want to convince. Well no kidding!

So discounting those, we can see that a good mission statement will focus on difference. If we look at CVS, their mission is to be easy to use. This may seem like a statement of the obvious, but I don’t think it is – because they have identified a strategy they think will get them market share. Now they can design KPIs to measure ease of use. This is the sort of thinking that led to innovations like the ‘drive-thru’ pharmacy.

If we look at Disney, you can go further. “…[To] be one of the world’s leading producers and providers of entertainment…” OK, so they admit being #1 is unrealistic, and if you want to be taken seriously, you need to be realistic. But if you are one of many, how do you shine? “Using our portfolio of brands to differentiate” They realise they can sell a bit of plastic shaped like a mouse for a lot more than anyone else can. There is a hidden nod to the importance of brand protection. So KPIs for market share and brand awareness fall right out. They finish off with “the most creative, innovative and profitable entertainment” Well you can’t blame them for that.

Very rarely, you see a mission statement that not only shows how the company intends to make money, but may also inspire and make pretty decent PR. I like this one from ADM:

To unlock the potential of nature to improve the quality of life.

I have no idea how to get a KPI from that though!

Summary

In this article I have tried to illustrate how measuring a KPI is much like taking the pulse of a body – it’s a one-off health check, yes, but more importantly it can be a longer term measurement of how your interventions are affecting company fitness.

I have also tried to describe some common pitfalls in the use of KPIs and presented four simple tests of their value:

- the KPI must correlate to a company’s mission

- the KPI must form part of a corrective feedback loop

- perverse incentives can be avoided by never considering any single KPI in isolation

- repeat the treatment only if the benefits repeat too

I have personally used this checklist (with a few refinements) over the years to some good effect in my own industry (minerals & materials) and though I am confident many of my readers will have more refined methods, I live in hope that at least one idea here will be of benefit to you.
